Hey, I'm Evan! 👋🏼

I am a computer science Ph.D. student at MIT. My research focuses on understanding what deep neural networks learn from large quantities of data: what concepts are encoded in their features, and how can we communicate a network's learned algorithm to humans? I am extremely fortunate to be advised by Jacob Andreas and to work closely with Antonio Torralba.

Before graduate school, I earned my B.S. in computer science and mathematics from the University of Wisconsin-Madison. There, I developed a natural language interface for the educational programming environment Blockly and studied how to synthesize example programs for students using a noisy, hand-drawn sketch as a specification.

I also worked for Google as a software engineer and contributed to Chromium.

Research

We present a procedure to automatically generate natural language descriptions of neurons in computer vision models. These generated descriptions support important interpretability applications: we use them to analyze neuron importance, identify adversarial vulnerabilities, audit for unexpected features, and edit out spurious correlations.

GANs sometimes encode visual concepts in their latent space as linear directions. We construct a visual concept vocabulary for pretrained GANs, consisting of latent directions and free-form language descriptions of the changes they induce. We then distill the vocabulary into simpler, one-word visual concepts (e.g., snow or clouds).

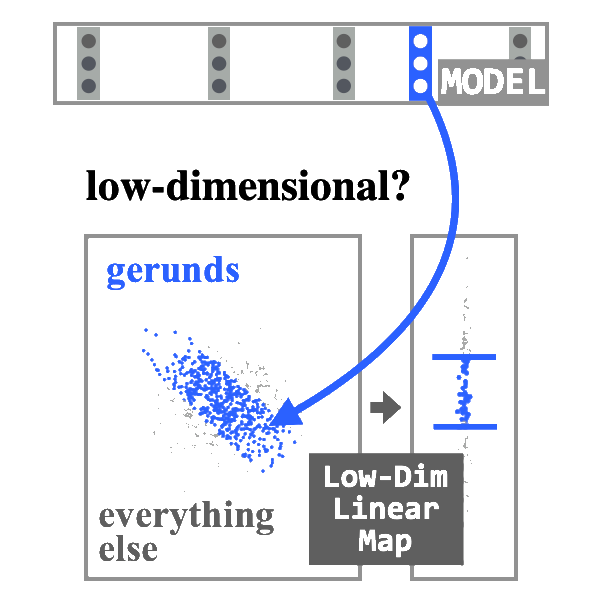

How do word representations geometrically encode linguistic abstractions like part of speech? We find that many linguistic features are encoded in low-dimensional subspaces of contextual word representation spaces, and these subspaces can causally influence model predictions.

Teaching

I had the pleasure of mentoring an MSRP summer intern on a research project. She developed a language-based image editing tool for images generated by GANs.

MIT’s primary NLP course, typically taken after a first course in ML. I wrote homework assignments, planned recitations, and led weekly office hours.

For three years, I tutored underrepresented students in UW-Madison engineering programs in introductory computer science and math classes. I also developed tutoring software to support the by-request and drop-in tutoring services.